saversites


Everything posted by saversites

-

Hi, I have a form field where I prompt the user for their domain name. Now I need to filter it so that the user can only submit a valid domain. How do I do that? This isn't working: $primary_website_domain = filter_var(trim($_POST["primary_website_domain"],FILTER_SANITIZE_DOMAIN)); $primary_website_domain_confirmation = filter_var(trim($_POST["primary_website_domain_confirmation"],FILTER_SANITIZE_DOMAIN));
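For reference: PHP has no FILTER_SANITIZE_DOMAIN constant, and in the snippet above the filter name ends up as the second argument of trim() rather than of filter_var(). Below is a minimal sketch of one way to validate the input, assuming PHP 7 or later, where FILTER_VALIDATE_DOMAIN and FILTER_FLAG_HOSTNAME are available. The helper name clean_domain() and the "must contain a dot" rule are assumptions made for illustration; they are not part of the original script.

```php
<?php
// Hypothetical helper: returns the normalised domain, or null if the input is not a valid hostname.
function clean_domain(string $input): ?string
{
    $domain = strtolower(trim($input));

    // FILTER_FLAG_HOSTNAME restricts the value to letters, digits and hyphens per label.
    $valid = filter_var($domain, FILTER_VALIDATE_DOMAIN, FILTER_FLAG_HOSTNAME);

    // Assumption: a registrable domain needs at least one dot, so "localhost" is rejected.
    if ($valid === false || strpos($domain, '.') === false) {
        return null;
    }

    return $domain;
}

// Usage with the form fields from the post (commented out so the sketch runs without a request):
// $primary_website_domain = clean_domain($_POST["primary_website_domain"] ?? '');
// if ($primary_website_domain === null) { echo "Please enter a valid domain!"; }
```

This only checks the syntax of the name. It does not check that the domain is registered or that it belongs to the user.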

-

Thank you Stef. I have not tried on a live server. I guess I should do that first! Cheers!

-

PHP experts, I need to fetch not the last row in the table but the latest entry that matches a condition. I only know how to fetch the last row in the table. I tried coding it the way I think it is done, but no luck. I Googled it, but no luck either. $query_for_today_date_and_time = "SELECT date_and_time FROM logins WHERE username = ? ORDER BY id DESC LIMIT 1"; if($stmt_for_today_date_and_time = mysqli_prepare($conn,$query_for_today_date_and_time)) { mysqli_stmt_bind_param($stmt_for_today_date_and_time,'s',$db_username); mysqli_stmt_execute($stmt_for_today_date_and_time); $result_for_today_date_and_time = mysqli_stmt_bind_result($stmt_for_today_date_and_time,$db_today_date_and_time); mysqli_stmt_fetch($stmt_for_today_date_and_time); $today_date_and_time = $db_today_date_and_time; mysqli_stmt_close($stmt_for_today_date_and_time); } else { /* mysqli_prepare() returned false here, so there is no statement to close. */ mysqli_close($conn); die("<h1 style=\"font-family:courier; color:red; text-size:25%; text-align:center\"><b> ERROR STATEMENT 11: Sorry! Our system is currently experiencing a problem! </b></h1> <br> "); }
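The WHERE ... ORDER BY id DESC LIMIT 1 pattern in the query above is the usual way to get the newest row that matches a condition, provided id grows with every insert. Here is a compact sketch of the same lookup wrapped in a function, assuming the logins table and column names from the post. The function name fetch_last_login() is hypothetical.

```php
<?php
// Hypothetical wrapper around the query from the post.
// Returns the newest date_and_time for $username, or null if there is none.
function fetch_last_login(mysqli $conn, string $username): ?string
{
    $sql = 'SELECT date_and_time FROM logins WHERE username = ? ORDER BY id DESC LIMIT 1';

    $stmt = mysqli_prepare($conn, $sql);
    if ($stmt === false) {
        // Nothing was prepared, so there is no statement to close.
        return null;
    }

    mysqli_stmt_bind_param($stmt, 's', $username);
    mysqli_stmt_execute($stmt);
    mysqli_stmt_bind_result($stmt, $dateAndTime);

    // mysqli_stmt_fetch() returns true when a row was read and null when no row matched.
    $found = mysqli_stmt_fetch($stmt);
    mysqli_stmt_close($stmt);

    return $found === true ? (string) $dateAndTime : null;
}

// Usage (needs a live connection, so it is left commented out):
// $today_date_and_time = fetch_last_login($conn, $db_username);
```

If this returns null even though rows exist, it may be worth checking that $db_username holds the value that is actually stored in the username column.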

-

The following fails to grab the user's real IP. I am testing on my XAMPP (localhost). The code is supposed to grab the real IP even if the user is hiding behind a proxy. Why is it not showing my dynamic IP? I can see my IP on whatismyip.com, but this code fails to show it. I have been testing on localhost using Mini Proxy. function getUserIpAddr() { if(!empty($_SERVER['HTTP_CLIENT_IP'])){ //IP from Shared Internet $ip = $_SERVER['HTTP_CLIENT_IP']; }elseif(!empty($_SERVER['HTTP_X_FORWARDED_FOR'])){ //IP from Proxy $ip = $_SERVER['HTTP_X_FORWARDED_FOR']; }else{ $ip = $_SERVER['REMOTE_ADDR']; } return $ip; } echo 'User real Ip - '.getUserIpAddr(); I get this as output: ::1 What does that output mean?
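For reference, ::1 is the IPv6 loopback address, the IPv6 counterpart of 127.0.0.1. When the browser and the web server run on the same machine the request never leaves that machine, so REMOTE_ADDR is most likely ::1 no matter what the public IP is. The sketch below takes the server array as a parameter so it can be tried without a real request. The function name get_client_ip() and the choice to use only the first X-Forwarded-For entry are assumptions.

```php
<?php
// Hypothetical helper: picks a client IP from a $_SERVER-style array.
function get_client_ip(array $server): string
{
    // $_SERVER keys are case-sensitive, so 'http_client_ip' would never match.
    foreach (['HTTP_CLIENT_IP', 'HTTP_X_FORWARDED_FOR'] as $key) {
        if (empty($server[$key])) {
            continue;
        }
        // X-Forwarded-For may hold a comma-separated chain; take the first entry.
        $candidate = trim(explode(',', $server[$key])[0]);
        if (filter_var($candidate, FILTER_VALIDATE_IP) !== false) {
            return $candidate;
        }
    }

    return $server['REMOTE_ADDR'] ?? '';
}

// Usage in a page: echo 'User IP - ' . get_client_ip($_SERVER);
```

Both headers are sent by the client or by a proxy and can be forged, so the result is a hint rather than proof of the visitor's address.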

-

Hi tef,

Can you kindly respond to my thread? It is an interesting topic.

-

Folks, I need to auto-submit URLs one by one to my MySQL db via my "Link Submission" form. The Link Submission form will belong to my future search engine, which I am currently coding in PHP as a PHP learning assignment. For simplicity's sake, let's forget my search engine project and assume I have a web form on an external website that I need filled in with people's personal details. Say the external website's form looks like this: <form name="login_form" method="post" action="yourdomain.com/form.php" enctype="multipart/form-data"> <fieldset> <legend>Log In</legend> <label>First Name: <input type="text" name="first_name"></label> <label>Surname: <input type="text" name="surname"></label> <input type="radio" name="sex" id="male" value="male"> <label for="male">Male</label> <input type="radio" name="sex" id="female" value="female"> <label for="female">Female</label> <input type="checkbox" name="programming_languages[]" id="php" value="php"> <label for="php">Php</label> <input type="checkbox" name="programming_languages[]" id="python" value="python"> <label for="python">Python</label> <label for="country">Country:</label> <select name="country" id="country"> <option value="usa">USA</option> <option value="uk">UK</option> </select> <label for="comment">Address:</label> <textarea rows="3" cols="30" name="comment" id="comment"></textarea> <label for="file-select">Upload:</label> <input type="file" name="upload" id="file-select"> <input type="submit" value="Submit"> <input type="reset" value="Reset"> </fieldset> </form> As you can see, the form has the following input fields: text, radio button, checkbox, dropdown, textarea, file upload, reset button and submit button.
Now imagine I have people's personal details listed in arrays like this: $first_name = array("Jack", "Jane"); $surname = array("Smith", "Mills"); $gender = array("Male", "Female"); $programming_languages = array("Php", "Php"); $country = array("USA", "USA"); $address = array("101 Piper St, New Jersey, USA", "52 Alton Beech Rd, California, USA"); $files_and_paths = array("C:\\Desktop\\address.txt", "C:\\My Documents\\address.doc"); Now I need the PHP script to grab the data from the arrays, fill it into the form's input fields one person at a time, and submit the form. In our example each array has 2 values, so the first person's (Jack's) details must be submitted in the first round and the second person's (Jane's) details in the second round. I do not know how to achieve this, so kindly advise with PHP sample code. I need to learn:
1. Which PHP and cURL functions type into text input fields;
2. Which PHP and cURL functions select the radio button option matching the current array value;
3. Which PHP and cURL functions select the checkbox options matching the current array value;
4. Which PHP and cURL functions select the dropdown option matching the current array value;
5. Which PHP and cURL functions click buttons (reset, submit, file upload);
6. Which PHP and cURL functions auto-upload the file whose path is listed in the current array value.
I would appreciate any code snippets or, better, a code sample that teaches me how to do all this. I found no proper tutorial covering all the tasks I mentioned. I need immediate attention. Thanks
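A note on the six questions above: cURL does not type into fields or click buttons. It sends the same HTTP request the browser would send on submit, so every control reduces to a name => value pair, and a file input becomes a CURLFile object. The sketch below assumes the fields are named first_name, surname, sex, programming_languages[], country, comment and upload. Those names are hypothetical and have to be read from the name attributes of the real form. The URL and file path in the usage comment are placeholders.

```php
<?php
// One column per form field; index 0 is the first person, index 1 the second.
// Field names are assumptions and must match the target form's name="" attributes.
$columns = [
    'first_name'              => ['Jack', 'Jane'],
    'surname'                 => ['Smith', 'Mills'],
    'sex'                     => ['male', 'female'],   // radio: send the value of the chosen option
    'programming_languages[]' => ['Php', 'Php'],       // checkbox: send the value of the ticked box
    'country'                 => ['usa', 'usa'],       // select: send the value of the chosen option
    'comment'                 => ['101 Piper St, New Jersey, USA', '52 Alton Beech Rd, California, USA'],
];

// Turns the column arrays into one field => value array per person.
function build_payloads(array $columns): array
{
    if ($columns === []) {
        return [];
    }
    $rows = min(array_map('count', $columns));
    $payloads = [];
    for ($i = 0; $i < $rows; $i++) {
        // array_map() with a single array keeps the string keys (the field names).
        $payloads[$i] = array_map(function (array $values) use ($i) {
            return $values[$i];
        }, $columns);
    }
    return $payloads;
}

// Sends one POST request; returns the response body, or false on failure.
function submit_form(string $url, array $fields, ?string $uploadPath = null)
{
    if ($uploadPath !== null) {
        $fields['upload'] = new CURLFile($uploadPath);   // takes the place of the file input
    }
    $ch = curl_init($url);
    curl_setopt($ch, CURLOPT_POST, true);
    curl_setopt($ch, CURLOPT_POSTFIELDS, $fields);       // an array is sent as multipart/form-data
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    return curl_exec($ch);
}

// Usage (not executed here; URL and path are placeholders):
// foreach (build_payloads($columns) as $fields) {
//     $response = submit_form('https://example.com/form.php', $fields, '/path/to/address.txt');
// }
```

A reset button has no equivalent because it never sends a request. Submitting forms on a site that belongs to someone else should only be done with the owner's permission.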

-

Php Experts, For some reason I can't get the "if(file_exists" to work. I don't want the user uploading the same file again. If he tries then should get error alert: "Error: You have already uploaded a video file to verify your ID!" On the comments, I have written in CAPITALS such as: IS THIS LINE CORRECT ? IS THIS LINE OK ? IS LINE OK ? CORRECT ? I need your attention most on those particular lines to tell me if I wrote those lines correct or not. Those are the places where I need your attention the most to tell me if I made any mistakes on those lines or not and if so then what the mistakes are. If you spot any other errors then kindly let me know. I'd appreciate sample snippets (as your corrections to my mistakes on the mistaken code lines) to what you think I should substitute my lines to. Thank You! Here is my attempt: <?php //Required PHP Files. include 'config.php'; include 'header.php'; include 'account_header.php'; if (!$conn) { $error = mysqli_connect_error(); $errno = mysqli_connect_errno(); print "$errno: $error\n"; exit(); } if($_SERVER["REQUEST_METHOD"] == "POST") { //Check whether the file was uploaded or not without any errors. if(!isset($_FILES["id_verification_video_file"]) && $_FILES["id_verification_video_file"]["Error"] == 0) { $Errors = Array(); $Errors[] = "Error: " . $_FILES["id_verification_video_file"] ["ERROR"]; print_r($_FILES); ?><br><?php print_r($_ERRORS); exit(); } else { //Feed Id Verification Video File Upload Directory path. $directory_path = "uploads/videos/id_verifications/"; //Make Directory under $user in 'uploads/videos/id_verifications' Folder. if(!is_dir($directory_path . $user)) //IS THIS LINE CORRECT ? { $mode = "0777"; mkdir($directory_path . $user, "$mode", TRUE); //IS THIS LINE CORRECT ? } //Grab Uploading File details. $Errors = Array(); //SHOULD I KEEP THIS LINE OR NOT ? 
$file_name = $_FILES["id_verification_video_file"]["name"]; $file_tmp = $_FILES["id_verification_video_file"]["tmp_name"]; $file_type = $_FILES["id_verification_video_file"]["type"]; $file_size = $_FILES["id_verification_video_file"]["size"]; $file_error = $_FILES['id_verification_video_file']['error']; //Grab Uploading File Extension details. $file_extension = pathinfo($file_name, PATHINFO_EXTENSION); //if(file_exists("$directory_path . $user/ . $file_name")) //IS THIS LINE CORRECT ? if(file_exists($directory_path . $user . '/' . $file_name)) //RETYPE { $Errors[] = "Error: You have already uploaded a video file to verify your ID!"; exit(); } else { //Feed allowed File Extensions List. $allowed_file_extensions = array("mp4" => "video/mp4"); //Feed allowed File Size. $max_file_size_allowed_in_bytes = 1024*1024*100; //Allowed limit: 100MB. $max_file_size_allowed_in_kilobytes = 1024*100; $max_file_size_allowed_in_megabytes = 100; $max_file_size_allowed = "$max_file_size_allowed_in_bytes"; //Verify File Extension. if(!array_key_exists($file_extension, $allowed_file_extensions)) die("Error: Select a valid video file format. Select an Mp4 file."); //Verify MIME Type of the File. elseif(!in_array($file_type, $allowed_file_extensions)) { $Errors[] = "Error: There was a problem uploading your file $file_name! Make sure your file is an MP4 video file. You may try again."; //IS THIS LINE CORRECT ? } //Verify File Size. Allowed Max Limit: 100MB. elseif($file_size>$max_file_size_allowed) die("Error: Your Video File Size is larger than the allowed limit of: $max_file_size_allowed_in_megabytes."); //Move uploaded File to newly created directory on the server. move_uploaded_file("$file_tmp", "$directory_path" . "$user/" . "$file_name"); //IS THIS LINE CORRECT ? //Notify user their Id Verification Video File was uploaded successfully. 
echo "Your Video File \"$file_name\" has been uploaded successfully!"; exit(); } } } ?> <form METHOD="POST" ACTION="" enctype="multipart/form-data"> <fieldset> <p align="left"><h3><?php $site_name ?> ID Video Verification Form</h3></p> <div class="form-group"> <p align="left"<label>Video File: </label> <input type="file" name="id_verification_video_file" id="id_verification_video_file" value="uploaded 'Id Verification Video File.'"></p> </div> </fieldset> <p align="left"><button type="submit" class="btn btn-default" name="id_verification_video_file_submit">Submit!</button></p> </form> </body> </html>
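Reading the script above, a few lines look like they could explain the behaviour: the first check combines !isset(...) with && and uses the keys "Error" and "ERROR" while PHP fills in the lowercase key "error"; mkdir() is given the string "0777" where it expects an octal integer such as 0777; and the duplicate branch adds a message to $Errors and then calls exit() without printing it. The sketch below collects the checks in one function that returns a list of messages. The names ensure_dir() and check_upload() and the 0755 mode are assumptions, and the MIME check is left out because the type reported in $_FILES comes from the browser.

```php
<?php
// Creates the directory if needed. The mode is an octal integer, not a string.
function ensure_dir(string $dir): bool
{
    return is_dir($dir) || mkdir($dir, 0755, true);
}

// Hypothetical validator: returns a list of error messages, empty when the file may be stored.
// $file has the same shape as one entry of $_FILES.
function check_upload(array $file, string $targetDir, int $maxBytes): array
{
    if (!isset($file['error']) || $file['error'] !== UPLOAD_ERR_OK) {
        return ['Error: The file was not uploaded correctly.'];
    }

    $errors = [];
    $name = basename($file['name']);   // drop any path sent by the client
    $extension = strtolower(pathinfo($name, PATHINFO_EXTENSION));

    if ($extension !== 'mp4') {
        $errors[] = 'Error: Select a valid video file format. Select an MP4 file.';
    }
    if ($file['size'] > $maxBytes) {
        $errors[] = 'Error: Your video file is larger than the allowed limit.';
    }
    if (file_exists(rtrim($targetDir, '/') . '/' . $name)) {
        $errors[] = 'Error: You have already uploaded a video file to verify your ID!';
    }

    return $errors;
}

// Usage inside the POST branch (commented out; $user comes from the original script):
// $dir = "uploads/videos/id_verifications/" . $user;
// $errors = check_upload($_FILES["id_verification_video_file"], $dir, 1024 * 1024 * 100);
// if ($errors === [] && ensure_dir($dir)) {
//     move_uploaded_file($_FILES["id_verification_video_file"]["tmp_name"], $dir . '/' . basename($_FILES["id_verification_video_file"]["name"]));
// } else {
//     echo implode('<br>', $errors);
// }
```

Note that file_exists() on the file name only catches a second upload with the same name. If the rule is one verification video per user, checking whether the user's directory already contains any file would be closer to the intent.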

-

Why I See Blank Page After Submitting Form And Why Echoing Fails ?

saversites replied to saversites's topic in PHP

I modified the script but no luck! One year has passed! Hi, Below is a membership or account registration page script. I need to get the User to type the password twice. Final one as the confirmation. I tried both the following for the "Not Equal To" operator after inputting mismatching inputs into the password input field and the password confirmation (re-type password field) field and none of them work as I do not get the alert that the passwords don't match. != !== My troubled lines: 1st Attempt no luck: if ($password != $password_confirmation) { echo "Passwords don't match!"; exit(); 2nd Attempt no luck either: if ($password !== $password_confirmation) { echo "Passwords don't match!"; exit(); Even if I rid the exit(), still no luck to get the error that the passwords do not match. My full script: <?php //Required PHP Files. include 'config.php'; include 'header.php'; //Step 1: Before registering user Account, check if User is already registered or not. //Check if User is already logged-in or not. Get the login_check() custom FUNCTION to check. if (login_check() === TRUE) { die("You are already logged-in! No need to register again!"); } if ($_SERVER['REQUEST_METHOD'] == "POST") { //Step 2: Check User submitted details. //Check if User made all the required inputs or not. 
if (isset($_POST["fb_tos_agreement_reply"]) && isset($_POST["username"]) && isset($_POST["password"]) && isset($_POST["password_confirmation"]) && isset($_POST["fb_tos"]) && isset($_POST["primary_website_domain"]) && isset($_POST["primary_website_domain_confirmation"]) && isset($_POST["primary_website_email_account"]) && isset($_POST["primary_website_email_account_confirmation"]) && isset($_POST["primary_website_email_domain"]) && isset($_POST["primary_website_email_domain_confirmation"]) && isset($_POST["first_name"]) && isset($_POST["middle_name"]) && isset($_POST["surname"]) && isset($_POST["gender"]) && isset($_POST["age_range"]) && isset($_POST["working_status"]) && isset($_POST["profession"])) { //Step 3: Check User details for matches against database. If no matches then validate inputs to register User Account. //Create Variables based on user inputs. $fb_tos_agreement_reply = trim($_POST["fb_tos_agreement_reply"]); $username = trim($_POST["username"]); $password = $_POST["password"]; $password_confirmation = $_POST["password_confirmation"]; $primary_website_domain = trim($_POST["primary_website_domain"]); $primary_website_domain_confirmation = trim($_POST["primary_website_domain_confirmation"]); $primary_website_email_account = trim($_POST["primary_website_email_account"]); $primary_website_email_account_confirmation = trim($_POST["primary_website_email_account_confirmation"]); $primary_website_email_domain = trim($_POST["primary_website_email_domain"]); $primary_website_email_domain_confirmation = trim($_POST["primary_website_email_domain_confirmation"]); $primary_website_email = "$primary_website_email_account@$primary_website_email_domain"; $first_name = trim($_POST["first_name"]); $middle_name = trim($_POST["middle_name"]); $surname = trim($_POST["surname"]); $gender = $_POST["gender"]; $age_range = $_POST["age_range"]; $working_status = $_POST["working_status"]; $profession = $_POST["profession"]; $account_activation_code = sha1( (string) mt_rand(0, 
99999999)); //Type Casted the INT to STRING on the 11st parameter of sha1 as it needs to be a string. //$account_activation_link = "http://www.".$site_domain."/".$social_network_name."/activate_account.php?primary_website_email="."$primary_website_email_account $primary_website_email_domain"."&account_activation_code=".$account_activation_code.""; $account_activation_link = "http://www.\".$site_domain.\"/\".$social_network_name.\"/activate_account.php?primary_website_email=\".\"$primary_website_email_account\".\"@\".\"prmary_website_domain\".\"&account_activation_code=\".\"account_activation_code\""; $account_activation_status = 0; //1 = active; 0 = inactive. $hashed_password = password_hash($password, PASSWORD_DEFAULT); //Encrypt the password. //Select Username and Email to check against Mysql DB if they are already regsitered or not. $stmt = mysqli_prepare($conn,"SELECT username, primary_website_domain, primary_website_email FROM users WHERE username = ? OR primary_website_domain = ? OR primary_website_email = ?"); mysqli_stmt_bind_param($stmt,'sss', $username, $primary_website_domain, $primary_website_email); mysqli_stmt_execute($stmt); $result = mysqli_stmt_get_result($stmt); $row = mysqli_fetch_array($result, MYSQLI_ASSOC); if (strlen($fb_tos_agreement_reply) < 1 || $fb_tos_agreement_reply !== "Yes") { echo "You must agree to our <a href='tos.html'>Terms & Conditions</a>!"; echo "$fb_tos"; exit(); //Check if inputted Username is already registered or not. } elseif ($row['username'] == $username) { echo "That Username is already registered!"; exit(); //Check if inputted Username is between the required 8 to 30 characters long or not. } elseif (strlen($username) < 8 || strlen($username) > 30) { echo "Username has to be between 8 to 30 characters long!"; exit(); //Check if both inputted Email Accounts match or not. 
} elseif ($primary_website_email_account != $primary_website_email_account_confirmation) { echo "Email Accounts don't match!"; //exit(); //Check if both inputted Email Domains match or not. } elseif ($primary_website_email_domain != $primary_website_email_domain_confirmation) { echo "Email Domains don't match!"; //exit(); //Check if inputted Email is valid or not. } elseif (!filter_var($primary_website_email, FILTER_VALIDATE_EMAIL)) { echo "Invalid Email! Your Email Domain must match your Domain Name! Insert your real Email (which is under your inputted Domain) for us to email you your 'Account Acivation Link'."; //Check if inputted Email is already regsitered or not. } elseif ($row['primary_website_email'] == $primary_website_email) { echo "That Email is already registered!"; exit(); //Check if both inputted Passwords match or not. } elseif ($password != $password_confirmation) { echo "Passwords don't match!"; exit(); //Check if Password is between 8 to 30 characters long or not. } elseif (strlen($password) < 8 || strlen($password) > 30) { echo "Password must be between 8 to 30 characters long!"; exit(); } else { //Insert the User's inputs into Mysql database using Php's Sql Injection Prevention Method "Prepared Statements". $stmt = mysqli_prepare($conn,"INSERT INTO users(username,password,primary_website_domain,primary_website_email,first_name,middle_name,surname,gender,age_range,working_status,profession,account_activation_code,account_activation_status) VALUES (?,?,?,?,?,?,?,?,?,?,?,?,?)"); mysqli_stmt_bind_param($stmt,'ssssssssssssi', $username,$hashed_password,$primary_website_domain,$primary_website_email,$first_name,$middle_name,$surname,$gender,$age_range,$working_status,$profession,$account_activation_code,$account_activation_status); mysqli_stmt_execute($stmt); //Check if User's registration data was successfully submitted or not. if (!$stmt) { echo "Sorry! Our system is currently experiencing a problem registering your account! 
You may try registering some other time!"; exit(); } else { //Email the Account Activation Link for the User to click it to confirm their email and activate their new account. $to = "$email"; $subject = "Your ".$site_name." account activation details"; $body = nl2br(" ===============================\r\n ".$site_name." \r\n ===============================\r\n From: ".$site_admin_email."\r\n To: ".$email."\r\n Subject: Your ".$subject."\r\n Message: ".$first_name." ".$surname."\r\n You need to click on this following <a href=".$account_activation_link.">link</a> to activate your account.\r\n "); $headers = "From: ".$site_admin_email."\r\n"; if (!mail($to,$subject,$body,$headers)) { echo "Sorry! We have failed to email you your Account Activation details. Please contact the website administrator!"; exit(); } else { echo "<h3 style='text-align:center'>Thank you for your registration!<br /> Check your email $primary_website_email for details on how to activate your account which you just registered.<h3>"; exit(); } } } } } ?> <!DOCTYPE html> <html> <head> <title><?php echo "$social_network_name"; ?> Signup Page</title> </head> <body> <div class ="container"> <?php //Error Messages. if (isset($_SESSION['error']) && !empty($_SESSION['error'])) { echo '<p style="color:red;">'.$_SESSION['error'].'</p>'; } ?> <?php //Session Messages. if (isset($_SESSION['message']) && !empty($_SESSION['message'])) { echo '<p style="color:red;">'.$_SESSION['error'].'</p>'; } ?> <?php //Clear Registration Session. function clear_registration_session() { //Clear the User Form inputs, Session Messages and Session Errors so they can no longer be used. 
unset($_SESSION['message']); unset($_SESSION['error']); unset($_POST); exit(); } ?> <form method="post" action=""> <p align="left"><h2>Signup Form</h2></p> <div class="form-group"> <p align="left"><label>Username:</label> <input type="text" placeholder="Enter a unique Username" name="username" required [A-Za-z0-9] autocorrect=off value="<?php if(isset($_POST['username'])) { echo htmlentities($_POST['username']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Password:</label> <input type="password" placeholder="Enter a new Username" name="password" required [A-Za-z0-9] autocorrect=off></p> </div> <div class="form-group"> <p align="left"><label>Repeat Password:</label> <input type="password" placeholder="Repeat the new Password" name="password_confirmation" required [A-Za-z0-9] autocorrect=off></p> </div> <div class="form-group"> <p align="left"><label>Primary Domain:</label> <input type="text" placeholder="Enter your Primary Domain" name="primary_website_domain" required [A-Za-z0-9] autocorrect=off value="<?php if(isset($_POST['primary_website_domain'])) { echo htmlentities($_POST['primary_website_domain']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Repeat Primary Domain:</label> <input type="text" placeholder="Enter your Primary Domain Again" name="primary_website_domain_confirmation" required [A-Za-z0-9] autocorrect=off value="<?php if(isset($_POST['primary_website_domain_confirmation'])) { echo htmlentities($_POST['primary_website_domain_confirmation']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Email Account:</label> <input type="text" placeholder="Enter your Email Account" name="primary_website_email_account" required [A-Za-z0-9] autocorrect=off value="<?php if(isset($_POST['primary_website_email_account'])) { echo htmlentities($_POST['primary_website_email_account']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Repeat Email Account:</label> <input type="text" 
placeholder="repeat your Email Account" name="primary_website_email_account_confirmation" required [A-Za-z0-9] autocorrect=off value="<?php if(isset($_POST['primary_website_email_account_confirmation'])) { echo htmlentities($_POST['primary_website_email_account_confirmation']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Email Domain:</label> <input type="text" placeholder="Enter your Email Domain" name="primary_website_email_domain" required [A-Za-z0-9] autocorrect=off value="<?php if(isset($_POST['primary_website_email_domain'])) { echo htmlentities($_POST['primary_website_email_domain']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Repeat Email Domain:</label> <input type="text" placeholder="Repeat your Email Domain" name="primary_website_email_domain_confirmation" required [A-Za-z0-9] autocorrect=off value="<?php if(isset($_POST['primary_website_email_domain_confirmation'])) { echo htmlentities($_POST['primary_website_email_domain_confirmation']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>First Name:</label> <input type="text" placeholder="Enter your First Name" name="first_name" required [A-Za-z] autocorrect=off value="<?php if(isset($_POST['first_name'])) { echo htmlentities($_POST['first_name']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Middle Name:</label> <input type="text" placeholder="Enter your Middle Name" name="middle_name" required [A-Za-z] autocorrect=off value="<?php if(isset($_POST['middle_name'])) { echo htmlentities($_POST['middle_name']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Surname:</label> <input type="text" placeholder="Enter your Surname" name="surname" required [A-Za-z] autocorrect=off value="<?php if(isset($_POST['surname'])) { echo htmlentities($_POST['surname']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Gender:</label> <input type="radio" name="gender" autocorrect=off value="male" <?php 
if(isset($_POST['gender'])) { echo 'checked'; }?> required>Male <input type="radio" name="gender" autocorrect=off value="female" <?php if(isset($_POST['gender'])) { echo 'checked'; }?> required>Female</p> </div> <div class="form-group"> <p align="left"><label>Age Range:</label> <input type="radio" name="age_range" value="18-20" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>18-20 <input type="radio" name="age_range" value="21-25" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>21-25 <input type="radio" name="age_range" value="26-30" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>26-30 <input type="radio" name="age_range" value="31-35" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>31-35 <input type="radio" name="age_range" value="36-40" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>36-40 <input type="radio" name="age_range" value="41-45" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>41-45 <input type="radio" name="age_range" value="46-50" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>46-50 <input type="radio" name="age_range" value="51-55" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>51-55 <input type="radio" name="age_range" value="56-60" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>56-60 <input type="radio" name="age_range" value="61-65" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>61-65 <input type="radio" name="age_range" value="66-70" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>66-70 <input type="radio" name="age_range" value="71-75" <?php if(isset($_POST['age_range'])) { echo 'checked'; }?> required>71-75</p> </div> </div> <div class="form-group"> <p align="left"><label>Working Status:</label> <input type="radio" name="working_status" value="Selfemployed" <?php if(isset($_POST['selfemployed'])) { echo 'checked'; }?> 
required>Selfemployed <input type="radio" name="working_status" value="Employed" <?php if(isset($_POST['employed'])) { echo 'checked'; }?> required>Employed <input type="radio" name="working_status" value="unemployed" <?php if(isset($_POST['unemployed'])) { echo 'checked'; }?> required>Unemployed</p> </div> <div class="form-group"> <p align="left"><label>Profession:</label> <input type="text" placeholder="Enter your Profession" name="profession" required [A-Za-z] autocorrect=off value="<?php if(isset($_POST['profession'])) { echo htmlentities($_POST['profession']); }?>"></p> </div> <div class="form-group"> <p align="left"><label>Agree To Our Terms & Conditions ? :</label> <input type="radio" name="fb_tos_agreement_reply" value="yes" <?php if(isset($_POST['fb_tos_agreement_reply'])) { echo 'checked'; }?> required>Yes <input type="radio" name="fb_tos_agreement_reply" value="No" <?php if(isset($_POST['fb_tos_agreement_reply'])) { echo 'checked'; }?> required>No</p> </div> <p align="left"><button type="submit" class="btn btn-default" name=submit">Register!</button></p> <p align="left"><font color="red" size="3"><b>Already have an account ?</b><br><a href="login.php">Login here!</a></font></p> </form> </div> </body> </html> -
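Reading the script above, two things appear to keep the password comparison from ever running. The outer if requires $_POST["fb_tos"], but the form has no field with that name, so the whole validation block is skipped without any message. The terms radio button also sends "yes" while the script compares against "Yes", so the first branch would stop the script before the password branch is reached. The sketch below reports which required fields are missing instead of skipping silently. The helper names missing_fields() and passwords_match() are assumptions.

```php
<?php
// Hypothetical helper: returns the names of required fields that are absent or blank.
function missing_fields(array $post, array $required): array
{
    $missing = [];
    foreach ($required as $name) {
        if (!isset($post[$name]) || !is_string($post[$name]) || trim($post[$name]) === '') {
            $missing[] = $name;
        }
    }
    return $missing;
}

// Strict comparison, so "123" and "0123" or "1e3" and "1000" are never treated as equal.
function passwords_match(string $password, string $confirmation): bool
{
    return $password === $confirmation;
}

// Usage in the registration script (commented out so the sketch runs without a request):
// $missing = missing_fields($_POST, ["username", "password", "password_confirmation", "fb_tos_agreement_reply"]);
// if ($missing !== []) {
//     die("Missing fields: " . htmlentities(implode(", ", $missing)));
// }
// if (!passwords_match($_POST["password"], $_POST["password_confirmation"])) {
//     die("Passwords don't match!");
// }
```

Printing the list of missing names during development makes this kind of silent skip visible straight away.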

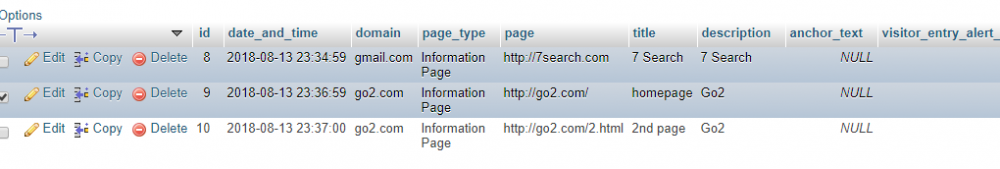

I have error reporting switched on in one of the included files. I am not getting any errors. <?php //ERROR REPORTING CODES. declare(strict_types=1); ini_set('display_errors', '1'); ini_set('display_startup_errors', '1'); error_reporting(E_ALL); mysqli_report(MYSQLI_REPORT_ERROR | MYSQLI_REPORT_STRICT); ?> OK, I will shorten the code in the example below. The MySQL queries are not working. if ($_GET["Result_SearchType"] == "Domain") { //Grabbing these: $_GET["Result_Domain"], $_GET["Result_PageType"]. $first_param = $_GET["Result_PageType"]; $second_param = $_GET["Result_Domain"]; echo "$second_param"; $query_1 = "SELECT COUNT(*) FROM submissions_index WHERE page_type = ? AND domain = ? LIMIT ?"; $stmt_1 = mysqli_prepare($conn,$query_1); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); mysqli_stmt_execute($stmt_1); $result_1 = mysqli_stmt_bind_result($stmt_1,$matching_rows_count); mysqli_stmt_fetch($stmt_1); mysqli_stmt_free_result($stmt_1); $total_pages = ceil($matching_rows_count/$Result_LinksPerPage); $query_2 = "SELECT id,date_and_time,domain,page_type,page,title,description FROM submissions_index WHERE page_type = ? AND domain = ? LIMIT ?"; $stmt_2 = mysqli_prepare($conn,$query_2); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); $result_2 = mysqli_stmt_bind_result($stmt_2,$Result_Id,$Result_LinkSubmissionDateAndTime,$Result_Domain,$Result_PageType,$Result_Page,$Result_PageTitle,$Result_PageDescription); mysqli_stmt_fetch($stmt_2); This URL should pull the data from the db table, since there is at least one match for the query: http://localhost/test/links_stats.php?Result_SearchType=Domain&Result_PageType=Information20%PAge&Result_Domain=gmail.com&Result_LinksPerPage=25&Result_PageNumber= See the image in the previous post for what my MySQL table looks like.
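Three details in the shortened code above stand out. The second mysqli_stmt_bind_param() call is given $stmt_1 instead of $stmt_2, and $stmt_2 is never passed to mysqli_stmt_execute() before the fetch. The test URL contains "Information20%PAge", while a URL-encoded space is written %20, so page_type probably never equals the stored value. Finally, $Result_LinksPerPage is only defined in the full script, not in this excerpt. The sketch below covers the two parts that can be tried without a database: the page arithmetic and building a correctly encoded test URL. The function names page_window() and stats_url() are assumptions.

```php
<?php
// Hypothetical helper: works out the LIMIT/OFFSET values for one page of results.
function page_window(int $totalRows, int $perPage, int $page): array
{
    $perPage = max(1, $perPage);                              // avoid division by zero
    $totalPages = max(1, (int) ceil($totalRows / $perPage));
    $page = min(max(1, $page), $totalPages);                  // clamp to the valid range

    return [
        'total_pages' => $totalPages,
        'page'        => $page,
        'offset'      => ($page - 1) * $perPage,
        'limit'       => $perPage,
    ];
}

// Hypothetical helper: builds the query string so spaces become %20.
function stats_url(string $base, array $params): string
{
    return $base . '?' . http_build_query($params, '', '&', PHP_QUERY_RFC3986);
}

// Usage: the values would feed a query ending in "LIMIT ? OFFSET ?" bound with 'ii'.
// $window = page_window($matching_rows_count, (int) $Result_LinksPerPage, (int) $Result_PageNumber);
// echo stats_url('http://localhost/test/links_stats.php', ['Result_SearchType' => 'Domain', 'Result_PageType' => 'Information Page']);
```

The COUNT(*) query returns a single row, so it does not need the LIMIT placeholder. Whether page_type is stored as "Information Page" is a guess based on the URLs in the post.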

-

Folks, Why are my $query_1 failing to pull data from mysql db ? I created a condition to get alert if no result is found but I do not get the alert. That means result is found. But if found, then why the following code fails to display or echo the result through html ? Trying to pull the data with these urls: http://localhost/test/links_stats.php?Result_SearchType=Domain&Result_PageType=Information20%Page&Result_Domain=gmail.com&Result_LinksPerPage=25&Result_PageNumber= http://localhost/test/links_stats.php?Result_SearchType=Page&Result_PageType=Information%20Page&Result_Page=http://go2.com/2.html&Result_LinksPerPage=25&Result_PageNumber= http://localhost/test/COMPLETE/links_stats.php?Result_SearchType=Keywords&Result_PageType=Information20%Page&Result_Keywords=go2&Result_LinksPerPage=25&Result_PageNumber= <?php //Required PHP Files. include 'config.php'; include 'header.php'; include 'account_header.php'; if (!$conn) { $error = mysqli_connect_error(); $errno = mysqli_connect_errno(); print "$errno: $error\n"; exit(); } else { ?> <body bgcolor='blue'> <?php //Get the Page Number. Default is 1 (First Page). $Result_PageNumber = $_GET["Result_PageNumber"]; if ($Result_PageNumber == "") { $Result_PageNumber = 1; } $Result_LinksPerPage = $_GET["Result_LinksPerPage"]; if ($Result_LinksPerPage == "") { $Result_LinksPerPage = 1; } $max_result = 100; //$offset = ($Result_PageNumber*$Result_LinksPerPage)-$Result_LinksPerPage; $offset = ($Result_PageNumber-1)*$Result_LinksPerPage; if ($_GET["Result_SearchType"] == "Keywords") { //Grabbing these: $_GET["Result_PageType"],$_GET["Result_Keywords"]. $first_param = $_GET["Result_PageType"]; $second_param = $_GET["Result_Keywords"]; echo "$second_param"; $query_1 = "SELECT COUNT(*) FROM submissions_index WHERE page_type = ? AND description = ? 
LIMIT ?"; $stmt_1 = mysqli_prepare($conn,$query_1); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); mysqli_stmt_execute($stmt_1); $result_1 = mysqli_stmt_bind_result($stmt_1,$matching_rows_count); mysqli_stmt_fetch($stmt_1); mysqli_stmt_free_result($stmt_1); $total_pages = ceil($matching_rows_count/$Result_LinksPerPage); $query_2 = "SELECT id,date_and_time,domain,page_type,page,title,description FROM submissions_index WHERE page_type = ? AND description = ? LIMIT ?"; $stmt_2 = mysqli_prepare($conn,$query_2); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); $result_2 = mysqli_stmt_bind_result($stmt_2,$Result_Id,$Result_LinkSubmissionDateAndTime,$Result_Domain,$Result_PageType,$Result_Page,$Result_PageTitle,$Result_PageDescription); mysqli_stmt_fetch($stmt_2); } elseif ($_GET["Result_SearchType"] == "Domain") { //Grabbing these: $_GET["Result_Domain"], $_GET["Result_PageType"]. $first_param = $_GET["Result_PageType"]; $second_param = $_GET["Result_Domain"]; echo "$second_param"; $query_1 = "SELECT COUNT(*) FROM submissions_index WHERE page_type = ? AND domain = ? LIMIT ?"; $stmt_1 = mysqli_prepare($conn,$query_1); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); mysqli_stmt_execute($stmt_1); $result_1 = mysqli_stmt_bind_result($stmt_1,$matching_rows_count); mysqli_stmt_fetch($stmt_1); mysqli_stmt_free_result($stmt_1); $total_pages = ceil($matching_rows_count/$Result_LinksPerPage); $query_2 = "SELECT id,date_and_time,domain,page_type,page,title,description FROM submissions_index WHERE page_type = ? AND domain = ? 
LIMIT ?"; $stmt_2 = mysqli_prepare($conn,$query_2); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); $result_2 = mysqli_stmt_bind_result($stmt_2,$Result_Id,$Result_LinkSubmissionDateAndTime,$Result_Domain,$Result_PageType,$Result_Page,$Result_PageTitle,$Result_PageDescription); mysqli_stmt_fetch($stmt_2); } elseif ($_GET["Result_SearchType"] == "PageTitle") { //Grabbing these: $_GET["Result_pageTitle"], $_GET["Result_PageType"]. $first_param = $_GET["Result_PageType"]; $second_param = $_GET["Result_PageTitle"]; echo "$second_param"; $query_1 = "SELECT COUNT(*) FROM submissions_index WHERE page_type = ? AND title = ? LIMIT ?"; $stmt_1 = mysqli_prepare($conn,$query_1); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); mysqli_stmt_execute($stmt_1); $result_1 = mysqli_stmt_bind_result($stmt_1,$matching_rows_count); mysqli_stmt_fetch($stmt_1); mysqli_stmt_free_result($stmt_1); $total_pages = ceil($matching_rows_count/$Result_LinksPerPage); $query_2 = "SELECT id,date_and_time,domain,page_type,page,title,description FROM submissions_index WHERE page_type = ? AND title = ? LIMIT ?"; $stmt_2 = mysqli_prepare($conn,$query_2); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); $result_2 = mysqli_stmt_bind_result($stmt_2,$Result_Id,$Result_LinkSubmissionDateAndTime,$Result_Domain,$Result_PageType,$Result_Page,$Result_PageTitle,$Result_PageDescription); mysqli_stmt_fetch($stmt_2); } elseif ($_GET["Result_SearchType"] == "Page") { //Grabbing these: $_GET["Result_Page"], $_GET["Result_PageType"]. $first_param = $_GET["Result_PageType"]; $second_param = $_GET["Result_Page"]; echo "$second_param"; $query_1 = "SELECT COUNT(*) FROM submissions_index WHERE page_type = ? AND page = ? 
LIMIT ?"; $stmt_1 = mysqli_prepare($conn,$query_1); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); mysqli_stmt_execute($stmt_1); $result_1 = mysqli_stmt_bind_result($stmt_1,$matching_rows_count); mysqli_stmt_fetch($stmt_1); mysqli_stmt_free_result($stmt_1); $total_pages = ceil($matching_rows_count/$Result_LinksPerPage); $query_2 = "SELECT id,date_and_time,domain,page_type,page,title,description FROM submissions_index WHERE page_type = ? AND page = ? LIMIT ?"; $stmt_2 = mysqli_prepare($conn,$query_2); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); $result_2 = mysqli_stmt_bind_result($stmt_2,$Result_Id,$Result_LinkSubmissionDateAndTime,$Result_Domain,$Result_PageType,$Result_Page,$Result_PageTitle,$Result_PageDescription); mysqli_stmt_fetch($stmt_2); } elseif ($_GET["Result_SearchType"] == "PageDescription") { //Grabbing these: $_GET["Result_PageDescription"], $_GET["Result_PageType"]. $first_param = $_GET["Result_PageType"]; $second_param = $_GET["Result_PageDescription"]; echo "$second_param"; $query_1 = "SELECT COUNT(*) FROM submissions_index WHERE page_type = ? AND description = ? LIMIT ?"; $stmt_1 = mysqli_prepare($conn,$query_1); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); mysqli_stmt_execute($stmt_1); $result_1 = mysqli_stmt_bind_result($stmt_1,$matching_rows_count); mysqli_stmt_fetch($stmt_1); mysqli_stmt_free_result($stmt_1); $total_pages = ceil($matching_rows_count/$Result_LinksPerPage); $query_2 = "SELECT id,date_and_time,domain,page_type,page,title,description FROM submissions_index WHERE page_type = ? AND description = ? 
LIMIT ?"; $stmt_2 = mysqli_prepare($conn,$query_2); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); $result_2 = mysqli_stmt_bind_result($stmt_2,$Result_Id,$Result_LinkSubmissionDateAndTime,$Result_Domain,$Result_PageType,$Result_Page,$Result_PageTitle,$Result_PageDescription); mysqli_stmt_fetch($stmt_2); } elseif ($_GET["Result_SearchType"] == "AnchorText") { //Grabbing these: $_GET["Result_AnchorText"], $_GET["Result_PageType"]. $first_param = $_GET["Result_PageType"]; $second_param = $_GET["Result_AnchorText"]; echo "$second_param"; $query_1 = "SELECT COUNT(*) FROM submissions_index WHERE page_type = ? AND anchor_text = ? LIMIT ?"; } $stmt_1 = mysqli_prepare($conn,$query_1); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); mysqli_stmt_execute($stmt_1); $result_1 = mysqli_stmt_bind_result($stmt_1,$matching_rows_count); mysqli_stmt_fetch($stmt_1); mysqli_stmt_free_result($stmt_1); $total_pages = ceil($matching_rows_count/$Result_LinksPerPage); $query_2 = "SELECT id,date_and_time,domain,page_type,page,title,description FROM submissions_index WHERE page_type = ? AND anchor_text = ? LIMIT ?"; $stmt_2 = mysqli_prepare($conn,$query_2); mysqli_stmt_bind_param($stmt_1,'ssi',$first_param,$second_param,$Result_LinksPerPage); $result_2 = mysqli_stmt_bind_result($stmt_2,$Result_Id,$Result_LinkSubmissionDateAndTime,$Result_Domain,$Result_PageType,$Result_Page,$Result_PageTitle,$Result_PageDescription); mysqli_stmt_fetch($stmt_2); if(!$stmt_2) { ?> <tr> <td bgcolor="#FFFFFF">No record found! 
Try another time.</td> </tr> <?php } else { if(($offset+1)<=$max_result) { ?> <p align="left"> <?php echo "<font color='white'><b>LINK SUBMISSION ID:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_Id</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>LINK SUBMISSION DATE & TIME:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_LinkSubmissionDateAndTime</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>DOMAIN:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_Domain</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_Page</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE TYPE:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_PageType</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE TITLE:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_PageTitle</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE DESCRIPTION:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_PageDescription</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE SEARCH STATISTICS:</b></font>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Current&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='gold'><b>Current Visitor</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Last&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='silver'><b>Last Visitor</b></font></a>"; ?><br> <?php echo "<a 
href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Latest&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='bronze'><b>Latest Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Lost&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='grey'><b>Lost Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Unhappy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='yellow'><b>Unhappy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Happy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='green'><b>Happy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Competing&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='red'><b>Competing Visitors</font></b></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=All&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='blue'><b>All Visitors</font></b></a>"; ?><br> <br> <br> <?php echo "<font color='white'><b>DOMAIN SEARCH STATISTICS:</b></font>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Current&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='gold'><b>Current Visitor</b></font></a>"; ?><br> <?php 
echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Last&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='silver'><b>Last Visitor</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Latest&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='bronze'><b>Latest Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Lost&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='grey'><b>Lost Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Unhappy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='yellow'><b>Unhappy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Happy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='green'><b>Happy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Competing&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='red'><b>Competing Visitors</font></b></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=All&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='blue'><b>All Visitors</font></b></a>"; ?><br> <br> <br> </align> <?php //Use this technique: 
http://php.net/manual/en/mysqli-stmt.fetch.php while(mysqli_stmt_fetch($stmt_2)) { ?> <p align="left"> <?php echo "<font color='white'><b>LINK SUBMISSION ID:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_Id</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>LINK SUBMISSION DATE & TIME:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_LinkSubmissionDateAndTime</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>DOMAIN:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_Domain</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_Page</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE TYPE:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_PageType</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE TITLE:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_PageTitle</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE DESCRIPTION:</b></font>"; ?><br> <?php echo "<font color='white'><i>$Result_PageDescription</i></font>"; ?><br> <br> <br> <?php echo "<font color='white'><b>PAGE SEARCH STATISTICS:</b></font>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Current&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='gold'><b>Current Visitor</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Last&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='silver'><b>Last Visitor</b></font></a>"; ?><br> <?php echo "<a 
href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Latest&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='bronze'><b>Latest Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Lost&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='grey'><b>Lost Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Unhappy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='yellow'><b>Unhappy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Happy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='green'><b>Happy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=Competing&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='red'><b>Competing Visitors</font></b></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Keywords&Result_PageType=$Result_PageType&Result_Page=$Result_Page&Result_VisitorType=All&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='blue'><b>All Visitors</font></b></a>"; ?><br> <br> <br> <?php echo "<font color='white'><b>DOMAIN SEARCH STATISTICS:</b></font>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Current&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='gold'><b>Current Visitor</b></font></a>"; ?><br> <?php 
echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Last&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='silver'><b>Last Visitor</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Latest&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='bronze'><b>Latest Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Lost&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='grey'><b>Lost Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Unhappy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='yellow'><b>Unhappy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Happy&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='green'><b>Happy Visitors</b></font></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=Competing&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='red'><b>Competing Visitors</font></b></a>"; ?><br> <?php echo "<a href=\"visits_stats.php?Result_StatsType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_VisitorType=All&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber=\"><font color='blue'><b>All Visitors</font></b></a>"; ?><br> <br> <br> </align> <tr name="pagination"> <td colspan="10" bgcolor="#FFFFFF"> 
Result Pages: <?php if($Result_PageNumber<$total_pages) { for($i=1;$i<=$total_pages;$i++) //Show Page Numbers in Serial Order. Eg. 1,2,3. echo "<a href=\"{$_SERVER['PHP_SELF']}?user=$user&Result_SearchType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber={$i}\">{$i}</a> "; } else { for($i=$total_pages;$i>=1;$i--) //Show Page Numbers in Reverse Order. Eg. 3,2,1. echo "<a href=\"{$_SERVER['PHP_SELF']}?user=$user&Result_SearchType=Domain&Result_PageType=$Result_PageType&Result_Domain=$Result_Domain&Result_LinksPerPage=$Result_LinksPerPage&Result_PageNumber={$i}\">{$i}</a> "; } ?> </td> </tr> <?php } } } ?> </table> <br> <br> <p align="center"><span style="font-weight:bold;"><?php echo "Search Result: <br>Searching for ...\"$second_param\" <br>Page Type: $first_param<br> Matches: $matching_rows_count <br>"; ?></span></align> <br> </div> <br> </body> </html>
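One thing stands out in the script above: every `$query_2` is prepared as `$stmt_2`, but its parameters are bound to `$stmt_1` and `$stmt_2` is never executed, so the fetch has nothing to read. Below is a minimal sketch of how the repeated branches could be collapsed, assuming the same `submissions_index` columns used in the queries above. The function names `column_for_search_type()`, `page_window()` and `fetch_submissions()` are made up for illustration, and the `LIMIT ?, ?` offset form is an assumption about how the paging was meant to work.

```php
<?php
// Hypothetical helper: map the Result_SearchType value to a known column.
// Column names are taken from the queries in the post above.
function column_for_search_type(string $searchType): ?string
{
    $columns = [
        'Domain'          => 'domain',
        'PageTitle'       => 'title',
        'Page'            => 'page',
        'PageDescription' => 'description',
        'AnchorText'      => 'anchor_text',
    ];
    return $columns[$searchType] ?? null; // null = unknown search type
}

// Hypothetical helper: total page count and row offset for a page number.
function page_window(int $totalRows, int $perPage, int $pageNumber): array
{
    $perPage    = max(1, $perPage);
    $totalPages = max(1, (int) ceil($totalRows / $perPage));
    $pageNumber = min(max(1, $pageNumber), $totalPages);
    return ['total_pages' => $totalPages, 'offset' => ($pageNumber - 1) * $perPage];
}

// Sketch only: needs a live mysqli connection, so it is not called here.
function fetch_submissions(mysqli $conn, string $column, string $pageType,
                           string $value, int $offset, int $perPage): array
{
    // $column must come from column_for_search_type(), never from user input.
    $sql = "SELECT id, date_and_time, domain, page_type, page, title, description
            FROM submissions_index
            WHERE page_type = ? AND $column = ?
            LIMIT ?, ?";
    $stmt = mysqli_prepare($conn, $sql);
    if ($stmt === false) {
        return [];
    }
    // Bind to the SAME statement that was just prepared, then execute it.
    mysqli_stmt_bind_param($stmt, 'ssii', $pageType, $value, $offset, $perPage);
    mysqli_stmt_execute($stmt);
    mysqli_stmt_bind_result($stmt, $id, $when, $domain, $type, $page, $title, $description);

    $rows = [];
    while (mysqli_stmt_fetch($stmt)) {
        $rows[] = compact('id', 'when', 'domain', 'type', 'page', 'title', 'description');
    }
    mysqli_stmt_close($stmt);
    return $rows;
}
```

With one lookup table for the column name, the six near-identical `elseif` branches reduce to a single prepare, bind, execute and fetch sequence, which makes a mismatched statement handle much harder to miss.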

-

PHP Buddies, Look at these 2 updates. Both succeed in fetching the PHP manual page but fail to fetch the Yahoo homepage. Why is that? The 2nd script is the same as the 1st except for a small change. Look at the commented-out part in script 2 to see the difference; the added code comes right after it. SCRIPT 1 <?php //HALF WORKING include('simple_html_dom.php'); $url = 'http://php.net/manual-lookup.php?pattern=str_get_html&scope=quickref'; // WORKS ON URL //$url = 'https://yahoo.com'; // FAILS ON URL $curl = curl_init($url); curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1); curl_setopt($curl, CURLOPT_FOLLOWLOCATION, 1); curl_setopt($curl, CURLOPT_SSL_VERIFYPEER, 0); curl_setopt($curl, CURLOPT_SSL_VERIFYHOST, 0); $response_string = curl_exec($curl); $html = str_get_html($response_string); //to fetch all hyperlinks from a webpage $links = array(); foreach($html->find('a') as $a) { $links[] = $a->href; } print_r($links); echo "<br />"; ?> SCRIPT 2 <?php //HALF WORKING include('simple_html_dom.php'); $url = 'http://php.net/manual-lookup.php?pattern=str_get_html&scope=quickref'; // WORKS ON URL //$url = 'https://yahoo.com'; // FAILS ON URL $curl = curl_init($url); curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1); curl_setopt($curl, CURLOPT_FOLLOWLOCATION, 1); curl_setopt($curl, CURLOPT_SSL_VERIFYPEER, 0); curl_setopt($curl, CURLOPT_SSL_VERIFYHOST, 0); $response_string = curl_exec($curl); $html = str_get_html($response_string); /* //to fetch all hyperlinks from a webpage $links = array(); foreach($html->find('a') as $a) { $links[] = $a->href; } print_r($links); echo "<br />"; */ // Hide HTML warnings libxml_use_internal_errors(true); $dom = new DOMDocument; if($dom->loadHTML($html, LIBXML_NOWARNING)){ // echo Links and their anchor text echo '<pre>'; echo "Link\tAnchor\n"; foreach($dom->getElementsByTagName('a') as $link) { $href = $link->getAttribute('href'); $anchor = $link->nodeValue; echo $href,"\t",$anchor,"\n"; } echo '</pre>'; }else{ echo "Failed to load html."; } ?> Don't forget my previous post! Cheers!
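Two things may be worth checking in the scripts above. First, `curl_exec()` returns `false` on failure, and as far as I know `str_get_html()` also returns `false` when the string is empty or longer than Simple HTML DOM's `MAX_FILE_SIZE` constant, which a large homepage could exceed. Second, Script 2 hands `$html` (the Simple HTML DOM result) to `DOMDocument::loadHTML()`, which expects the raw string in `$response_string`. Here is a minimal sketch of a guard around both cases; the helper name `hrefs_from_response()` is hypothetical.

```php
<?php
// Hypothetical helper: turn the return value of curl_exec() into a list of hrefs.
// $response is a string on success and false on failure (with CURLOPT_RETURNTRANSFER set).
function hrefs_from_response($response): array
{
    if ($response === false || trim($response) === '') {
        throw new RuntimeException('Nothing to parse: the fetch failed or returned an empty body.');
    }

    $previous = libxml_use_internal_errors(true); // keep HTML warnings off the page
    $dom = new DOMDocument();
    $dom->loadHTML($response);                    // raw string, not a Simple HTML DOM object
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $hrefs = [];
    foreach ($dom->getElementsByTagName('a') as $a) {
        if ($a->hasAttribute('href')) {
            $hrefs[] = $a->getAttribute('href');
        }
    }
    return $hrefs;
}
```

In the scripts above this would be called as `hrefs_from_response($response_string)`; when the exception fires, `curl_error($curl)` should say why the fetch itself failed.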

-

I am told: "file_get_html is a special function from simple_html_dom library. If you open source code for simple_html_dom you will see that file_get_html() does a lot of things that your curl replacement does not. That's why you get your error." Anyway, folks, I really don't want to use file_get_html() because of its limited capacity, so let's replace it with cURL. I gave cURL my best shot here. What about you? Care to show how to fix this?

-

UPDATE: I have been given this sample code just now ... Possible solution with str_get_html: $url = 'https://www.yahoo.com'; $curl = curl_init($url); curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1); curl_setopt($curl, CURLOPT_FOLLOWLOCATION, 1); curl_setopt($curl, CURLOPT_SSL_VERIFYPEER, 0); curl_setopt($curl, CURLOPT_SSL_VERIFYHOST, 0); $response_string = curl_exec($curl); $html = str_get_html($response_string); //to fetch all hyperlinks from a webpage $links = array(); foreach($html->find('a') as $a) { $links[] = $a->href; } print_r($links); echo "<br />"; Gonna experiment with it. Just sharing it here for other future newbies!

-

I just replaced: //$html = file_get_html('http://nimishprabhu.com'); with: $url = 'https://www.yahoo.com'; $curl = curl_init($url); curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1); curl_setopt($curl, CURLOPT_FOLLOWLOCATION, 1); curl_setopt($curl, CURLOPT_SSL_VERIFYPEER, 0); curl_setopt($curl, CURLOPT_SSL_VERIFYHOST, 0); $html = curl_exec($curl); That is all! That should not result in that error!

-

PHP Buddies, I am trying to learn to build a simple web crawler. First, I feed it a URL to start with. It fetches that page and extracts all the links into a single array. Then it fetches each of those linked pages and extracts all their links into a single array likewise. It keeps doing this until it reaches its maximum link depth. Here is how I coded it: <?php include('simple_html_dom.php'); $current_link_crawling_level = 0; $link_crawling_level_max = 2 if($current_link_crawling_level == $link_crawling_level_max) { exit(); } else { $url = 'https://www.yahoo.com'; $curl = curl_init($url); curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1); curl_setopt($curl, CURLOPT_FOLLOWLOCATION, 1); curl_setopt($curl, CURLOPT_SSL_VERIFYPEER, 0); curl_setopt($curl, CURLOPT_SSL_VERIFYHOST, 0); $html = curl_exec($curl); $current_link_crawling_level++; //to fetch all hyperlinks from the webpage $links = array(); foreach($html->find('a') as $a) { $links[] = $a->href; echo "Value: $value<br />\n"; print_r($links); $url = '$value'; $curl = curl_init($value); curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1); curl_setopt($curl, CURLOPT_FOLLOWLOCATION, 1); curl_setopt($curl, CURLOPT_SSL_VERIFYPEER, 0); curl_setopt($curl, CURLOPT_SSL_VERIFYHOST, 0); $html = curl_exec($curl); //to fetch all hyperlinks from the webpage $links = array(); foreach($html->find('a') as $a) { $links[] = $a->href; echo "Value: $value<br />\n"; print_r($links); $current_link_crawling_level++; } echo "Value: $value<br />\n"; print_r($links); } ?> I have a feeling I got confused and messed it up in the foreach loops. Nested too much. Is that the case? Hint where I went wrong. I am unable to test the script as I first have to sort out this error: Fatal error: Uncaught Error: Call to a member function find() on string in C:\xampp\h After that, I will be able to test it. Anyway, just looking at the script, do you think I got it right or not? Thanks
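The `find() on string` error is consistent with `curl_exec()` returning a plain string: the script calls `->find()` on it directly, without first turning it into a parser object. Two smaller things also look off: `$value` is never assigned, and `'$value'` in single quotes is the literal text, not the variable. Rather than nesting one foreach per level, a queue that remembers each link's depth may be easier to reason about. Here is a minimal sketch under some assumptions: the names `extract_hrefs()` and `crawl()` are hypothetical, the fetcher is passed in as a callable so the logic can be tried without a network, and only absolute http(s) links are followed.

```php
<?php
// Hypothetical sketch: breadth-first crawl up to $maxDepth link levels.
// $fetch is any callable that takes a URL and returns HTML (or false on failure).
function extract_hrefs(string $html): array
{
    $previous = libxml_use_internal_errors(true);
    $dom = new DOMDocument();
    $dom->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $hrefs = [];
    foreach ($dom->getElementsByTagName('a') as $a) {
        $href = $a->getAttribute('href');
        if (preg_match('~^https?://~i', $href)) { // absolute links only in this sketch
            $hrefs[] = $href;
        }
    }
    return $hrefs;
}

function crawl(string $startUrl, int $maxDepth, callable $fetch): array
{
    $seen  = [$startUrl => 0];        // url => depth at which it was found
    $queue = [[$startUrl, 0]];

    while ($queue) {
        [$url, $depth] = array_shift($queue);
        if ($depth >= $maxDepth) {
            continue;                 // deep enough: record it but do not fetch
        }
        $html = $fetch($url);
        if (!is_string($html) || trim($html) === '') {
            continue;                 // failed fetch: skip this page
        }
        foreach (extract_hrefs($html) as $href) {
            if (!isset($seen[$href])) {
                $seen[$href] = $depth + 1;
                $queue[] = [$href, $depth + 1];
            }
        }
    }
    return $seen;
}
```

For real use, `$fetch` would wrap the cURL calls from the post and return the result of `curl_exec()`. The `$seen` array doubles as a visited set, so pages that link back to each other are not fetched twice.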

-

Ok. I tried another ... <?php /* Using PHP's DOM functions to fetch hyperlinks and their anchor text */ $dom = new DOMDocument; $dom->loadHTML(file_get_contents('https://stackoverflow.com/questions/50381348/extract-urls-anchor-texts-from-links-on-a-webpage-fetched-by-php-or-curl/')); // echo Links and their anchor text echo '<pre>'; echo "Link\tAnchor\n"; foreach($dom->getElementsByTagName('a') as $link) { $href = $link->getAttribute('href'); $anchor = $link->nodeValue; echo $href,"\t",$anchor,"\n"; } echo '</pre>'; ?> I get error: Warning: DOMDocument::loadHTML(): Tag header invalid in Entity, line: 185 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 187 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag g invalid in Entity, line: 187 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 187 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 187 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag ellipse invalid in Entity, line: 187 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 187 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag main invalid in Entity, line: 190 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag section invalid in Entity, line: 191 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag header invalid in Entity, line: 192 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag article invalid in Entity, line: 195 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag footer invalid in Entity, line: 322 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): 
Tag article invalid in Entity, line: 323 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 325 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 326 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 327 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 328 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 332 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 333 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 334 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 335 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 336 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 337 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag lineargradient invalid in Entity, line: 338 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 339 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 340 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 341 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 342 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 343 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: 
DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 344 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 346 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag lineargradient invalid in Entity, line: 347 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 348 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 349 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 350 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 351 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 353 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag lineargradient invalid in Entity, line: 354 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 355 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 356 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 358 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag lineargradient invalid in Entity, line: 359 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 360 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 361 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 362 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 363 in 
C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag stop invalid in Entity, line: 364 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 366 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 367 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 368 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 369 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag nav invalid in Entity, line: 372 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 374 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 375 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 379 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 380 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 384 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 385 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 389 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 390 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag svg invalid in Entity, line: 394 in C:\xampp\htdocs\cURL\crawler.php on line 7 Warning: DOMDocument::loadHTML(): Tag path invalid in Entity, line: 395 in C:\xampp\htdocs\cURL\crawler.php on line 7 Link Anchor 
https://fiverr.zendesk.com/hc/en-us/requests/new?ticket_form_id=64087 contact customer support //app.appsflyer.com/id346080608?pid=blockpage //app.appsflyer.com/com.fiverr.fiverr?pid=blockpage //www.linkedin.com/company/fiverr-com //twitter.com/fiverr //www.pinterest.com/fiverr //www.facebook.com/fiverr //www.instagram.com/fiverr Why all these errors ?
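These warnings most likely come from the libxml2 HTML parser behind `DOMDocument::loadHTML()`: many builds predate HTML5 and report tags such as `header`, `main`, `svg` and `path` as invalid, yet still build the document, which is why the links are printed after the warnings. Here is a minimal sketch of collecting those messages instead of printing them; the helper name `parse_quietly()` is hypothetical, and how many messages you get depends on the libxml2 version installed.

```php
<?php
// Hypothetical helper: parse HTML quietly and report how many parser
// messages libxml raised, instead of letting them print as PHP warnings.
function parse_quietly(string $html): array
{
    $previous = libxml_use_internal_errors(true);  // buffer errors internally
    $dom = new DOMDocument();
    $dom->loadHTML($html);
    $messages = libxml_get_errors();               // array of LibXMLError objects
    libxml_clear_errors();
    libxml_use_internal_errors($previous);         // restore the earlier setting

    $links = [];
    foreach ($dom->getElementsByTagName('a') as $a) {
        $links[] = [$a->getAttribute('href'), trim($a->textContent)];
    }
    return ['links' => $links, 'message_count' => count($messages)];
}
```

Calling `libxml_use_internal_errors(true)` before `loadHTML()` keeps the page output clean without the `@` operator, and the messages remain available for logging if something does go wrong.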

-

Bump!

-

As you know, the code in my original post was for scraping all links found on the Google homepage. Thanks for the hint. I have worked on it but am facing a little problem. The 1st foreach belongs to the original script that scrapes the links from the Google homepage. I have now added 2 more foreach loops to scrape the outerhtml and innertext from each link, in the hope that one of the two would give me the links' anchor texts. But I get a blank page now. Here is the code ... <?php # Use the Curl extension to query Google and get back a page of results $url = "http://forums.devshed.com/"; $ch = curl_init(); $timeout = 5; curl_setopt($ch, CURLOPT_URL, $url); curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1); curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, $timeout); $html = curl_exec($ch); curl_close($ch); # Create a DOM parser object $dom = new DOMDocument(); # Parse the HTML from Devshed Forum. # The @ before the method call suppresses any warnings that # loadHTML might throw because of invalid HTML in the page. @$dom->loadHTML($html); # Iterate over all the <a> tags foreach($dom->getElementsByTagName('a') as $link) { # Show the <a href> echo $link->getAttribute('href'); echo "<br />"; foreach($dom->getElementsByTagName('p') as $outertml) { # Show the <outerhtml> echo $outerhtml->getAttribute('outerhtml'); echo "<br />"; foreach($dom->getElementsByTagName('p') as $innertext) { # Show the <innertext> echo $innertext->getAttribute('innertext'); echo "<br />"; } } } ?> I need the anchor text of each link on the line underneath the link. Where am I going wrong here? I am trying to scrape the links from here: http://forums.devshed.com/ Php Development Perl programming C Programming and so on ... Only scraping for learning purposes.
The HTML looks like this: <h2 class="demi-title"><a class="forums" href="http://forums.devshed.com/perl-programming-6/">Perl Programming</a> <span class="viewing instruction">(16 Viewing)</span></h2> </div> <p class="forumdescription">Perl Programming forum discussing coding in Perl, utilizing Perl modules, and other Perl-related topics. Perl, the Practical Extraction and Reporting Language, is the choice for many for parsing textual information.</p> This did not work either; I still see a blank page. Nothing is getting scraped or echoed: <?php # Use the Curl extension to query Google and get back a page of results $url = "http://forums.devshed.com/"; $ch = curl_init(); $timeout = 5; curl_setopt($ch, CURLOPT_URL, $url); curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1); curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, $timeout); $html = curl_exec($ch); curl_close($ch); # Create a DOM parser object $dom = new DOMDocument(); # Parse the HTML from Devshed Forum. # The @ before the method call suppresses any warnings that # loadHTML might throw because of invalid HTML in the page. @$dom->loadHTML($html); # Iterate over all the <a> tags foreach($dom->getElementsByTagName('a') as $link) { # Show the <a href> echo $link->getAttribute('href'); echo "<br />"; echo $link->nodeValue; } ?> Regards!
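A few observations on the two attempts above. `href` is a real attribute, but `outerhtml` and `innertext` are not attributes at all, so `getAttribute()` returns an empty string for them; with DOMDocument the anchor text is `textContent` (or `nodeValue`) and the outer HTML comes from `saveHTML($node)`. The first script also declares the loop variable as `$outertml` but reads `$outerhtml`. A blank page from the second script could mean `curl_exec()` returned `false` or an empty body (for example a redirect that is not followed because `CURLOPT_FOLLOWLOCATION` is not set), which the `@` then hides; that part is a guess. Here is a minimal sketch run against the markup quoted in the post, with `describe_links()` as a hypothetical helper name.

```php
<?php
// Hypothetical helper: for each <a>, return its href, its anchor text and
// its outer HTML, using DOMDocument equivalents of innertext/outertext.
function describe_links(string $html): array
{
    $previous = libxml_use_internal_errors(true);
    $dom = new DOMDocument();
    $dom->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $out = [];
    foreach ($dom->getElementsByTagName('a') as $link) {
        $out[] = [
            'href'  => $link->getAttribute('href'),   // a real attribute
            'text'  => trim($link->textContent),      // the anchor text
            'outer' => $dom->saveHTML($link),         // the whole <a ...>...</a> element
        ];
    }
    return $out;
}

// The markup quoted in the post above.
$sample = '<h2 class="demi-title"><a class="forums" '
        . 'href="http://forums.devshed.com/perl-programming-6/">Perl Programming</a> '
        . '<span class="viewing instruction">(16 Viewing)</span></h2>';

foreach (describe_links($sample) as $link) {
    echo $link['href'], "<br />\n", $link['text'], "<br />\n<br />\n";
}
```

Printing `var_dump($html)` right after `curl_exec()` is a quick way to tell a failed fetch apart from a parsing problem.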

-

PHP Buds, here's the code, using DOM, for grabbing links from Google:

    <?php
    # Use the Curl extension to query Google and get back a page of results
    $url = "http://www.google.com";
    $ch = curl_init();
    $timeout = 5;
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, $timeout);
    $html = curl_exec($ch);
    curl_close($ch);

    # Create a DOM parser object
    $dom = new DOMDocument();

    # Parse the HTML from Google.
    # The @ before the method call suppresses any warnings that
    # loadHTML might throw because of invalid HTML in the page.
    @$dom->loadHTML($html);

    # Iterate over all the <a> tags
    foreach($dom->getElementsByTagName('a') as $link) {
        # Show the <a href>
        echo $link->getAttribute('href');
        echo "<br />";
    }
    ?>

It echoes results sort of like this:

    https://www.google.com.com/webhp?tab=ww
    http://www.google.com.com/imghp?hl=bn&tab=wi
    http://maps.google.com.com/maps?hl=bn&tab=wl

Now, I'd like to convert the above code so that, again using DOM, it extracts all URLs and their anchor texts from all links on any chosen webpage, no matter what format the links are in. Formats such as:

    <a href="http://example1.com">Test 1</a>
    <a class="foo" id="bar" href="http://example2.com">Test 2</a>
    <a onclick="foo();" id="bar" href="http://example3.com">Test 3</a>

The anchor text should sit underneath each extracted URL, and there should be an empty line between the listed items. Such as:

    http://stackoverflow.com<br>
    A programmer's forum<br>
    <br>
    http://google.com<br>
    A searchengine<br>
    <br>
    http://yahoo.com<br>
    An Index<br>
    <br>

And so on. I'd also appreciate another version that uses cURL but not DOM and produces the same result. This cURL version (not using DOM) did not work as intended:

    <?php
    /*
    $curl = curl_init('http://devshed.com/');
    curl_setopt($curl, CURLOPT_RETURNTRANSFER, TRUE);
    $page = curl_exec($curl);
    if(curl_errno($curl)) // check for execution errors
    {
        echo 'Scraper error: ' . curl_error($curl);
        exit;
    }
    curl_close($curl);
    $regex = '<\s*a\s+[^>]*href\s*=\s*[\"']?([^\"' >]+)[\"' >]';
    if ( preg_match($regex, $page, $list) )
        echo $list[0];
    else
        print "Not found";
    ?>

Any chance I can get this done with cURL (not using DOM) and without the regex? You know I dislike regex and prefer simplicity in coding. Nevertheless, from your end, I'd like to see one sample with regex and another without. Oh, by the way, I really prefer not to use limited functions such as file_get_contents() and the like. You know what I mean. Give us your best shots! Take care! Cheers!
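
As a rough sketch of the regex variant asked for above: a PCRE pattern needs delimiters (here `~`), and `preg_match_all()` is needed to collect every link rather than only the first match. The function name `extract_links_regex()` is hypothetical, the sample input is the three link formats listed in the post, and the pattern only handles quoted href values, so it should be read as an illustration and not as a robust HTML parser.

```php
<?php
declare(strict_types=1);

/**
 * Regex-based variant: pull the href and anchor text out of <a> tags.
 * extract_links_regex() is a hypothetical name used only for this sketch.
 */
function extract_links_regex(string $html): array
{
    // Delimited with ~ so the pattern can contain / freely.
    // Group 1 = opening quote, group 2 = href value, group 3 = inner HTML of the link.
    $pattern = '~<a\b[^>]*?\bhref\s*=\s*(["\'])(.*?)\1[^>]*>(.*?)</a>~is';

    $links = [];
    if (preg_match_all($pattern, $html, $matches, PREG_SET_ORDER)) {
        foreach ($matches as $m) {
            $links[] = [
                'href' => html_entity_decode($m[2]),   // turn &amp; back into &
                'text' => trim(strip_tags($m[3])),     // drop nested tags such as <b>
            ];
        }
    }
    return $links;
}

// The three link formats listed in the post.
$sample = '<a href="http://example1.com">Test 1</a> '
        . '<a class="foo" id="bar" href="http://example2.com">Test 2</a> '
        . '<a onclick="foo();" id="bar" href="http://example3.com">Test 3</a>';

// URL first, anchor text underneath, then an empty line.
foreach (extract_links_regex($sample) as $link) {
    echo htmlspecialchars($link['href']), "<br>\n";
    echo htmlspecialchars($link['text']), "<br>\n<br>\n";
}
```

Unquoted href values and attributes whose names merely end in `href` are not handled correctly by this pattern, which is one reason a DOM parser is usually the simpler route.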

-

PHP Gurus, my following code shows all the results of the "notices" table. That table has these columns: id, recipient_username, sender_username, message. This code works, but it does not use prepared statements. I need your help to convert the code so that it does. In this code, all the records are spread over 10 pages. In the prepared-statement version, I need 10 records on each page. So, if there are 3,000 records, they would be spread over 300 pages.

NON-PREP STMT CODE

    $stmt = mysqli_prepare($conn, 'SELECT id, recipient_username, sender_username, message FROM notices WHERE recipient_username=?');
    mysqli_stmt_bind_param($stmt, 's', $recipient_username);
    mysqli_stmt_execute($stmt);
    //bind result variables
    mysqli_stmt_bind_result($stmt, $id, $recipient_username, $sender_username, $message);

    //Get Data from Tbl "notices"
    $sql = "SELECT * FROM notices";
    $result = mysqli_query($conn,$sql);
    //Total Number of Records
    $rows_num = mysqli_num_rows($result);
    //Total number of pages records are spread-over
    $page_count = 10;
    $page_size = ceil($rows_num / $page_count);
    //Get the Page Number, Default is 1 (First Page)
    $page_number = $_GET["page_number"];
    if ($page_number == "") $page_number = 1;
    $offset = ($page_number -1) * $page_size;
    $sql .= " limit {$offset},{$page_size}";
    $result = mysqli_query($conn,$sql);
    ?>
    <table width="1500" border="0" cellpadding="5" cellspacing="2" bgcolor="#666666">
    <?php if($rows_num) {?>
    <tr name="headings">
    <td bgcolor="#FFFFFF" name="column-heading_submission-number">Submission Number</td>
    <td bgcolor="#FFFFFF" name="column-heading_logging-server-date-&-time">Date & Time in <?php $server_time ?></td>
    <td bgcolor="#FFFFFF" name="column-heading_username">To</td>
    <td bgcolor="#FFFFFF" name="column-heading_gender">From</td>
    <td bgcolor="#FFFFFF" name="column-heading_age-range">Notice</td>
    </tr>
    <?php while($row = mysqli_fetch_array($result)){ ?>
    <tr name="user-details">
    <td bgcolor="#FFFFFF" name="submission-number"><?php echo $row['id']; ?></td>
    <td bgcolor="#FFFFFF" name="logging-server-date-&-time"><?php echo $row['date_and_time']; ?></td>
    <td bgcolor="#FFFFFF" name="username"><?php echo $row['recipient_username']; ?></td>
    <td bgcolor="#FFFFFF" name="gender"><?php echo $row['sender_username']; ?></td>
    <td bgcolor="#FFFFFF" name="age-range"><?php echo $row['message']; ?></td>
    </tr>
    <?php } ?>
    <tr name="pagination">
    <td colspan="10" bgcolor="#FFFFFF"> Result Pages:
    <?php if($rows_num <= $page_size) { echo "Page 1"; } else { for($i=1;$i<=$page_count;$i++) echo "<a href=\"{$_SERVER['PHP_SELF']}?page_number={$i}\">{$i}</a> "; } ?>
    </td>
    </tr>
    <?php } else { ?>
    <tr> <td bgcolor="FFFFFF">No record found! Try another time.</td> </tr>
    <?php }?>
    </table>
    <br> <br>
    <center><span style="font-weight: bold;"><?php $user ?>Notices in <?php $server_time ?> time.</span></center>
    <br> <br>
    </div>
    <br>
    </body>
    </html>

The following are my attempts to convert the above code to prepared statements, but I see arrays with no records:

    $query = "SELECT id, recipient_username, sender_username, message FROM notices ORDER by id";
    $result = $conn->query($query);
    /* numeric array */
    $row = $result->fetch_array(MYSQLI_NUM);
    printf ("%s (%s)\n", $row[0], $row[1]);
    /* associative array */
    $row = $result->fetch_array(MYSQLI_ASSOC);
    printf ("%s (%s)\n", $row["id"], $row["recipient_username"], $row["sender_username"], $row["message"]);
    /* associative and numeric array */
    $row = $result->fetch_array(MYSQLI_BOTH);
    printf ("%s (%s)\n", $row[0], $row["id"], $row[1], $row["recipient_username"], $row[2], $row["sender_username"], $row[3], $row["message"]);
    /* free result set */
    $result->free();
    /* close connection */
    $conn->close();

    $stmt = "SELECT id,date_and_time,recipient_username,sender_username,message FROM notices WHERE recipient_username = ?";
    mysqli_stmt_bind_param($stmt, 's', $username);
    mysqli_stmt_execute($stmt);
    $result = mysqli_stmt_get_result($stmt);
    $row = mysqli_fetch_array($result, MYSQLI_ASSOC);
    mysqli_stmt_bind_param($stmt, 's', $username);
    mysqli_stmt_execute($stmt);
    $result = mysqli_stmt_bind_result($stmt,$db_id,$db_date_and_time,$db_recipient_username,$db_sender_username,$db_message);
    mysqli_stmt_fetch($stmt);
    mysqli_stmt_close($stmt);

    $query = "SELECT id, recipient_username, sender_username, message FROM notices";
    if ($stmt = mysqli_prepare($conn, $query)) {
        //execute statement
        mysqli_stmt_execute($stmt);
        //bind result variables
        mysqli_stmt_bind_result($stmt, $id, $recipient_username, $sender_username, $message);
        //fetch values
        while (mysqli_stmt_fetch($stmt)) {
            printf ("%s (%s)\n", $id, $recipient_username, $sender_username, $message);
        }
        //close statement
        mysqli_stmt_close($stmt);
    }
    //close connection
    mysqli_close($conn);

    $stmt = mysqli_prepare($conn, 'SELECT id, recipient_username, sender_username, message FROM notices WHERE recipient_username=?');
    mysqli_stmt_bind_param($stmt, 's', $recipient_username);
    mysqli_stmt_execute($stmt);
    $notices_row = array();
    mysqli_stmt_bind_result($stmt, $notices_row['id'], $notices_row['recipient_username'], $notices_row['sender_username'], $notices_row['message']);
    while (mysqli_stmt_fetch($stmt)) {
        echo '<p>' . $notices_row['recipient_username'] . '</p>';
    }

I give up. They all show results like the following, even though the table rows have data:

    28 () 29 () 30 (30)

If you know of a simpler way (a cut-down version) to display all rows and all columns of the table using prepared statements in procedural style, then be my guest and show a sample. Thanks for your help.
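
A minimal procedural-style sketch of the "10 records per page" requirement with prepared statements, under stated assumptions: the table and column names come from the post, while `PAGE_SIZE`, `page_bounds()` and `fetch_notices_page()` are hypothetical names, and `$conn` is assumed to be an open mysqli connection. The page arithmetic is kept in its own function so it can be checked without a database.

```php
<?php
declare(strict_types=1);

const PAGE_SIZE = 10; // records per page, as requested in the post

/** Work out the total page count and the LIMIT offset for a 1-based page number. */
function page_bounds(int $total_rows, int $page_number, int $page_size = PAGE_SIZE): array
{
    $page_count  = max(1, (int) ceil($total_rows / $page_size));
    $page_number = min(max(1, $page_number), $page_count); // clamp out-of-range input
    return [
        'page_count'  => $page_count,
        'page_number' => $page_number,
        'offset'      => ($page_number - 1) * $page_size,
    ];
}

/** Fetch one page of notices for a recipient (hypothetical helper, not from the post). */
function fetch_notices_page(mysqli $conn, string $recipient, int $page_number): array
{
    // 1) Count the matching rows with a prepared statement.
    $stmt = mysqli_prepare($conn, 'SELECT COUNT(*) FROM notices WHERE recipient_username = ?');
    if (!$stmt) {
        throw new RuntimeException(mysqli_error($conn));
    }
    mysqli_stmt_bind_param($stmt, 's', $recipient);
    mysqli_stmt_execute($stmt);
    mysqli_stmt_bind_result($stmt, $total_rows);
    mysqli_stmt_fetch($stmt);
    mysqli_stmt_close($stmt);

    $bounds = page_bounds((int) $total_rows, $page_number);
    $offset = $bounds['offset'];
    $limit  = PAGE_SIZE; // bind_param needs variables, not constants

    // 2) Fetch only the rows of this page; offset and limit are bound as integers.
    $stmt = mysqli_prepare(
        $conn,
        'SELECT id, recipient_username, sender_username, message
           FROM notices WHERE recipient_username = ? ORDER BY id LIMIT ?, ?'
    );
    if (!$stmt) {
        throw new RuntimeException(mysqli_error($conn));
    }
    mysqli_stmt_bind_param($stmt, 'sii', $recipient, $offset, $limit);
    mysqli_stmt_execute($stmt);
    mysqli_stmt_bind_result($stmt, $id, $to, $from, $message);

    $rows = [];
    while (mysqli_stmt_fetch($stmt)) {
        $rows[] = ['id' => $id, 'to' => $to, 'from' => $from, 'message' => $message];
    }
    mysqli_stmt_close($stmt);

    return ['rows' => $rows] + $bounds;
}

// Usage (assumes $conn is an open connection and $username is the logged-in user):
// $page = fetch_notices_page($conn, $username, (int) ($_GET['page_number'] ?? 1));
// foreach ($page['rows'] as $row) { echo htmlspecialchars($row['message']), "<br>\n"; }
```

Two details in the attempts above are worth checking against this sketch: `mysqli_stmt_bind_param()` expects the statement returned by `mysqli_prepare()`, not the SQL string itself, and a format such as `"%s (%s)\n"` has only two placeholders, so `printf()` prints only the first two values passed to it.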

-

I now get an error saying that the variable $url is undefined on line 72. Notice: Undefined variable: url in C:\xampp\htdocs\...... If you check line 72, it says, between double quotes: $url = "http://devshed.com"; Even if I change the URL to one whose page cURL is able to fetch, I still get the same error. Single quotes do not work either: $url = 'http://devshed.com'; This is very, very strange! If the $url variable has not been defined, then how is cURL able to fetch the page whose URL is the value of $url? Even though the page gets fetched, I still see the error! Weird!